"Machine Unlearning in 2024" - An Overview

Summary and commentary on Ken Liu's post from May, and other updates

I’m never quite sure how to start any of these blog posts. For one thing, unlike in creative writing, my goal is to be as concise as possible. I’m also conveying largely factual information, which should be clearly delineated and separated from my own opinion. There’s no need to immerse my readers in a meticulously laid-out universe. Adding adjectives to another person’s words, such as by saying “Sam Altman said sheepishly” instead of “Sam Altman said,” would effectively color those words with my opinion. Personally, I think that is not only unnecessarily wordy but also diminishes the writer’s reliability. At the same time, however, writing a blog post, even the selection of quotes and screenshots I provide, is inherently an exercise of my own opinion.

Of course, not knowing how to properly start a blog post is not why I didn’t post anything in May. It turns out the post I was planning was more involved than anticipated, and ended up changing direction midway through planning. I’m still working on that post, but until that gets published, I’ll try to stick to the monthly schedule with other, maybe shorter content.

Today’s shorter content is an overview of another overview, Ken Liu’s post on “Machine Unlearning in 2024”. Most of this will be a summary of the content, with some additional commentary and critique primarily in the last section.

For the rest of this blog post, if I use an unattributed quote, it is a direct quote from Ken’s post. Other quotes will be directly attributed to another source.

What is Machine Unlearning?

Machine unlearning, broadly speaking, seeks to modify a target model into an unlearned model that “behaves like” a retrained model: one produced by the same training algorithm as the target model, but “trained on the same data as the target model, minus the information to be unlearned.”

Beyond being intellectually interesting and supporting efforts to address model safety (e.g. forgetting the concept of child porn) and nonconsensual data usage, machine unlearning is also strongly motivated by law. The EU’s General Data Protection Regulation (GDPR), adopted in 2016, includes Article 17, which “basically says a user has the right to request deletion of their data from a service provider (e.g. deleting your Gmail account).” This is easy when data is stored discretely, as with accounts, but significantly harder with machine learning, where it is unclear both which data was used and how that data contributed to the final model.

In this context, Ken splits machine unlearning motivations into two categories: access revocation, and model correction and editing. Access revocation is about unlearning private and copyrighted data, while model correction and editing centers on unlearning “bad data” at large. It may seem that access revocation targets individual training examples while model correction addresses broader concepts, but this is not necessarily true. For example, the Dune franchise is copyrighted, but also popular enough that it would be basically impossible to remove everything about Dune, or inspired by Dune, from training data on a per-example basis. If the owners of the Dune franchise were to request access revocation for their data, model owners would likely need to treat Dune as a broader concept.

Machine Unlearning Methods

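In Ken’s post, he splits machine unlearning methods into 5 categories, roughly in order of decreasing theoretical guarantees: exact unlearning, unlearning via differential privacy, empirical unlearning when the examples to unlearn are known, empirical unlearning when they are not, and simply prompting the model to unlearn.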

Exact Unlearning

Of these, only exact unlearning provably matches the retrained model. As a reminder, the retrained model is the model trained on all previous training data minus the data to be unlearned. More precisely, exact unlearning on the target model (defined in the previous section) produces models that are “distributionally identical” to the retrained model. That means that, given the same random initialization and other sources of randomness, exact unlearning on the target model produces exactly the same model as retraining.
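
To make “exactly the same model” concrete, here is a minimal sketch of my own (not from Ken’s post) using the simplest possible case: a one-feature least-squares line fit, whose parameters depend on the data only through a handful of running sums. Unlearning an example then means subtracting its contribution from those sums. The class and function names are hypothetical, and integer-valued data is assumed so that every sum, and therefore the comparison, is exact.

```python
from dataclasses import dataclass


@dataclass
class SummationLineFit:
    """Least-squares fit of y = slope * x + intercept, stored as running sums.

    The fitted parameters depend on the training data only through these
    sums, so removing an example is just subtracting its contribution.
    """
    n: int = 0
    sum_x: int = 0
    sum_y: int = 0
    sum_xx: int = 0
    sum_xy: int = 0

    def add(self, x: int, y: int) -> None:
        self.n += 1
        self.sum_x += x
        self.sum_y += y
        self.sum_xx += x * x
        self.sum_xy += x * y

    def remove(self, x: int, y: int) -> None:
        # Exact unlearning: undo this example's contribution to every sum.
        self.n -= 1
        self.sum_x -= x
        self.sum_y -= y
        self.sum_xx -= x * x
        self.sum_xy -= x * y

    def params(self) -> tuple[float, float]:
        denom = self.n * self.sum_xx - self.sum_x ** 2
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope, intercept


def fit(data: list[tuple[int, int]]) -> SummationLineFit:
    model = SummationLineFit()
    for x, y in data:
        model.add(x, y)
    return model


data = [(1, 2), (2, 4), (3, 7), (4, 8), (5, 11)]
forget = (3, 7)

unlearned = fit(data)
unlearned.remove(*forget)                             # unlearn without retraining
retrained = fit([ex for ex in data if ex != forget])  # retrain from scratch

print(unlearned.params() == retrained.params())  # True
```

Most modern models do not reduce to sums like this, which is part of why exact unlearning is hard at scale.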

Exact unlearning methods are few, and all seem to use a divide-and-conquer strategy. Both papers Ken references split the training data into small groups prior to model training. The first paper, from Cao & Yang, transforms individual groups of data before training a model on each group, which is significantly more efficient than training on all individual data points. The second paper trains a separate model on each smaller data group and aggregates those smaller models into the final model. Ken notes that, in today’s ML landscape, which favors data-heavy approaches, both methods would be disfavored. However, he argues in favor of revisiting data-sharding techniques “in light of the recent model merging literature,” which I agree with.
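
The shard-and-aggregate idea behind the second paper can be sketched as follows. This is a minimal illustration under my own assumptions, not the paper’s implementation: each shard trains a toy nearest-centroid classifier, examples are assigned to shards by id, and predictions are aggregated by majority vote. Unlearning retrains only the affected shard, and the result matches an ensemble retrained from scratch without the example.

```python
from collections import Counter


def train_centroids(examples):
    """Toy per-shard model: the mean feature vector of each label."""
    sums, counts = {}, Counter()
    for _, x, label in examples:
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, v in enumerate(x):
            acc[j] += v
        counts[label] += 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict_centroids(centroids, x):
    """Return the label whose centroid is closest to x (squared Euclidean distance)."""
    def sq_dist(label):
        return sum((c - v) ** 2 for c, v in zip(centroids[label], x))
    return min(centroids, key=sq_dist)


class ShardedEnsemble:
    """Train one model per data shard and aggregate predictions by majority vote."""

    def __init__(self, examples, num_shards):
        self.num_shards = num_shards
        # Fixed assignment rule (example id modulo shard count), so retraining
        # from scratch on the remaining data reproduces the same shards.
        self.shards = [[] for _ in range(num_shards)]
        for ex in examples:
            self.shards[ex[0] % num_shards].append(ex)
        self.models = [train_centroids(shard) for shard in self.shards]

    def unlearn(self, example_id):
        """Drop one example and retrain only the shard that contained it."""
        k = example_id % self.num_shards
        self.shards[k] = [ex for ex in self.shards[k] if ex[0] != example_id]
        self.models[k] = train_centroids(self.shards[k])

    def predict(self, x):
        votes = Counter(predict_centroids(model, x) for model in self.models)
        return votes.most_common(1)[0][0]


# (id, features, label) triples.
data = [
    (0, [0.0, 0.1], "a"), (1, [0.2, 0.0], "a"), (2, [0.1, 0.3], "a"),
    (3, [1.0, 0.9], "b"), (4, [0.8, 1.1], "b"), (5, [1.2, 1.0], "b"),
    (6, [0.3, 0.2], "a"), (7, [0.9, 0.8], "b"),
]

unlearned = ShardedEnsemble(data, num_shards=3)
unlearned.unlearn(4)  # retrains one of three shards
retrained = ShardedEnsemble([ex for ex in data if ex[0] != 4], num_shards=3)

print(unlearned.models == retrained.models)  # True
print(unlearned.predict([1.0, 1.0]))         # b
```

The cost of unlearning scales with the size of one shard rather than the whole dataset; the trade-off is that each model sees only a fraction of the data, which is why such methods sit awkwardly with today’s data-heavy approaches.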

Differential Privacy

Unlearning via differential privacy is the most robust inexact method. At a high level, differential privacy says “if the model behaves more or less the same with or without any particular data point, then there’s nothing we need to unlearn from that data point.” The primary procedure used in this approach is DP-SGD, which:

Clips the L2-norm of the per-example gradients, and

Injects per-coordinate Gaussian noise into the gradients

The idea is that the noise masks the contribution of any individual example, so the final model is not sensitive to any single example.
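
The two DP-SGD steps above can be sketched in a few lines. This is a minimal, hypothetical illustration that takes per-example gradients as given and averages the noisy sum over the batch; real implementations compute per-example gradients automatically and also track the cumulative privacy budget, which this sketch does not do. The parameter names (`clip_norm`, `noise_multiplier`) are my own choices.

```python
import math
import random


def clip_by_l2_norm(grad, clip_norm):
    """Scale a gradient vector down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum, add noise, average."""
    batch_size = len(per_example_grads)
    clipped = [clip_by_l2_norm(g, clip_norm) for g in per_example_grads]
    summed = [sum(coords) for coords in zip(*clipped)]
    # Clipping bounds how much any one example can change the sum; the
    # per-coordinate Gaussian noise is calibrated to that bound.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


rng = random.Random(0)
params = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4]]  # the first example has norm 5 and is clipped to norm 1
params = dp_sgd_step(params, grads, lr=0.1, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(params)
```

Setting `noise_multiplier=0.0` recovers plain SGD with gradient clipping, which makes it easy to see that the privacy comes from the noise, not from the clipping alone.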

While DP-based methods come with statistical guarantees, those guarantees usually hold only under conditions that may not be met in practice, such as a convex loss function. There can also be practical limitations, such as difficulty auditing: how can auditors verify that you removed an example if the model with the example removed is, by design, indistinguishable from the model with it?

Empirical Unlearning Methods

The previous two categories consisted of methods for which we “know” the retrained model without having to explicitly train it. In the first category, the unlearned model is mathematically identical to the retrained model. In the second, DP ensures that the unlearned model is “similar” to the retrained model. Starting from this category, however, researchers and practitioners no longer know what the retrained model is or how it should behave, unless they explicitly train it themselves. In other words, we lose any mathematical or statistical guarantees about closeness to the retrained model.

That isn’t to say that these methods are strictly “worse.” They differ qualitatively, and they can address models that don’t satisfy the limiting assumptions of DP or are too big to unlearn exactly.

Empirical unlearning methods can be split into cases in which we know which training examples to unlearn and cases in which we don’t. Methods in the first case can largely be summarized as “training to unlearn” or “unlearning via fine-tuning.” Examples include:

“Gradient ascent on the forget set”

“Gradient descent on the retain set (and hope that catastrophic forgetting takes care of unlearning)”

“Add some noise to the weights”

“Minimize KL divergence on outputs between unlearned model and original model on the retain set” (remember, we still know the exact training data to forget and the exact training data to retain)

And others!

Most empirical unlearning systems use some combination of the listed methods.
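
To show how two of these combine, here is a minimal sketch for a toy bias-free logistic-regression model (my own illustration; the model, data, learning rate, and `forget_weight` are all assumptions): each step ascends the cross-entropy loss on the forget set and descends a KL-to-the-original-model loss on the retain set. For a logistic model, both gradients have the same form, (p - q) * x, where q is a hard label for the forget set and the original model’s probability for the retain set.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, x):
    """Probability of label 1 under a bias-free logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


def mean_grad(w, examples):
    """Mean gradient of cross-entropy between targets q and predictions p.

    For a logistic model this gradient is (p - q) * x. With soft targets taken
    from the original model, it is also the gradient of KL(q || p), because the
    two losses differ only by the entropy of q, which does not depend on w.
    """
    grad = [0.0] * len(w)
    for x, q in examples:
        err = predict(w, x) - q
        grad = [g + err * xi for g, xi in zip(grad, x)]
    return [g / len(examples) for g in grad]


def unlearn_step(w, forget, retain, lr=0.5, forget_weight=1.0):
    """Gradient ascent on the forget set plus descent on a retain-set KL term."""
    g_forget = mean_grad(w, forget)
    g_retain = mean_grad(w, retain)
    return [wi + lr * (forget_weight * gf - gr)
            for wi, gf, gr in zip(w, g_forget, g_retain)]


original_w = [2.0, 2.0]
forget = [([0.0, 1.0], 1.0)]  # hard label of the example to be forgotten
# Retain targets are the original model's own probabilities (soft labels),
# so the retain term penalizes drifting away from the original model.
retain = [(x, predict(original_w, x)) for x in ([1.0, 0.0], [-1.0, 0.0])]

w = list(original_w)
for _ in range(20):
    w = unlearn_step(w, forget, retain)

# Confidence on the forget example drops; retain predictions are unchanged.
print(predict(original_w, [0.0, 1.0]), predict(w, [0.0, 1.0]))
```

Because the forget example uses a feature that the retain examples do not, the retain-set predictions stay put here. With real networks the two terms interact, and unbounded gradient ascent can degrade model utility, which is part of why the retain-set term is there.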

When we don’t know which training examples to unlearn, and instead want to unlearn something broader, we use the second category of empirical unlearning methods. These cover “model editing” and “concept editing” techniques, such as MEMIT.

As with MEMIT, unlearning requests can use examples indicating what to unlearn, but those examples “can manifest in the (pre-)training set in many different forms.” One example would be the Dune example provided earlier. Another example would be trying to unlearn “Biden is the US president,” which is embedded in lots of data in many different forms, and whose implications influence more data still.

Some methods in this area borrow techniques from the first category of empirical unlearning, such as gradient ascent, but apply them to externally sourced examples, such as those generated by GPT-4, instead of actual training examples. Others are based on task vectors: the general idea is to figure out the gradients (weight updates) that “bad” data induce and undo them.
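
The task-vector idea can be sketched with plain dictionaries of weights. This is a minimal illustration under my own assumptions (flat lists of floats per named parameter, hypothetical weights for a model already fine-tuned on examples of the unwanted content, and a hand-picked `scale`): the task vector is the difference between fine-tuned and original weights, and subtracting a scaled copy of it from the original weights moves the model away from what that data taught it.

```python
def task_vector(pretrained, finetuned):
    """Per-parameter difference between a model tuned on unwanted data and its base."""
    return {name: [f - p for f, p in zip(finetuned[name], pretrained[name])]
            for name in pretrained}


def negate_task_vector(weights, vector, scale=1.0):
    """Move the weights away from the direction the unwanted data pushed them."""
    return {name: [w - scale * v for w, v in zip(weights[name], vector[name])]
            for name in weights}


base = {"layer.weight": [0.5, -1.0, 2.0], "layer.bias": [0.1]}
# Hypothetical weights after fine-tuning `base` on examples of the content to unlearn.
tuned_on_bad = {"layer.weight": [0.75, -1.0, 2.5], "layer.bias": [0.0]}

tau = task_vector(base, tuned_on_bad)
edited = negate_task_vector(base, tau, scale=1.0)
print(edited)  # {'layer.weight': [0.25, -1.0, 1.5], 'layer.bias': [0.2]}
```

In practice `scale` trades off how much is forgotten against how much unrelated behavior shifts, and it would need to be tuned on held-out data.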

Prompting the Model

This category of methods, also known as “guardrails,” only works on instruction-finetuned LLMs (which nevertheless cover a large number of the most salient models today). It turns out that a combination of prompting and response filtering can perform as well as other unlearning methods on standard benchmarks.

Prompting techniques include literally asking the model to forget, which tends to work better for common knowledge. For knowledge that isn’t common, few-shot prompting can work: the model is given a few examples of what to forget along with a response to give instead (for example, “what color is Joe Biden’s cat?” “white”), as well as examples whose answers should stay correct (for example, “what is Joe Biden’s cat’s name?” “Willow”). Both examples are my own.
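
A prompt-plus-filter guardrail of this kind can be sketched as two small functions. Both are hypothetical helpers of my own; no particular LLM API is assumed, so the prompt would be sent to whatever model you use and its response passed through the filter. The instruction wording and blocked terms are illustrative only.

```python
def build_unlearning_prompt(topic, forget_demos, keep_demos, question):
    """Ask the model to forget a topic, then show few-shot demos of the desired behavior."""
    parts = [f"Answer as if you had never learned anything about {topic}."]
    # Demos pair questions to forget with replacement answers, plus questions
    # whose correct answers should be kept.
    for q, a in forget_demos + keep_demos:
        parts.append(f"Q: {q}\nA: {a}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def filter_response(response, blocked_terms, fallback="I don't know."):
    """Post-hoc response filter: replace any answer that mentions a blocked term."""
    lowered = response.lower()
    if any(term.lower() in lowered for term in blocked_terms):
        return fallback
    return response


prompt = build_unlearning_prompt(
    topic="the color of Joe Biden's cat",
    forget_demos=[("What color is Joe Biden's cat?", "White.")],
    keep_demos=[("What is Joe Biden's cat's name?", "Willow.")],
    question="Describe Joe Biden's cat.",
)
print(prompt)

# The filter is a second line of defense, shown here with the Dune example.
print(filter_response("Dune is set on the desert planet Arrakis.", ["Arrakis"]))  # I don't know.
```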

Evaluation

In my last blog post, I described the evaluation metrics used on MEMIT (an empirical unlearning method based on unknown training examples):

By expanding upon these, we get a high-level idea of what we care about when evaluating any unlearning method. Ken writes these metrics as follows:

“Specificity” or “neighborhood success” in the first image corresponds to “model utility” in Ken’s post, while “efficacy” and “paraphrase success” are both part of “forgetting quality.” Efficiency is not covered, which makes sense, because it’s a little more meta: it helps us evaluate whether the unlearning method is worth doing.
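
A simplified version of these metrics can be computed with exact-match checks. This is my own sketch, not the definitions from Ken’s post or the MEMIT paper (which compare model probabilities rather than exact strings); the unlearned model is stood in for by a hypothetical dictionary lookup, and the Dune questions are illustrative. Efficiency is omitted here too.

```python
def match_rate(model, pairs, want_match):
    """Fraction of (prompt, answer) pairs where model output does (or does not) equal answer."""
    hits = sum((model(prompt) == answer) == want_match for prompt, answer in pairs)
    return hits / len(pairs)


def evaluate_unlearning(model, forget_prompts, paraphrases, neighborhood):
    return {
        # Forgetting quality: the model should no longer give the forgotten answer...
        "efficacy": match_rate(model, forget_prompts, want_match=False),
        # ...even when the question is rephrased.
        "paraphrase_success": match_rate(model, paraphrases, want_match=False),
        # Model utility: related but distinct facts should be unaffected.
        "specificity": match_rate(model, neighborhood, want_match=True),
    }


# Hypothetical unlearned model, represented as a fixed prompt -> answer table.
answers = {
    "Who wrote Dune?": "I don't know.",
    "Dune was written by whom?": "Frank Herbert",
    "Who wrote Foundation?": "Isaac Asimov",
    "Who wrote Neuromancer?": "William Gibson",
}


def model(prompt):
    return answers.get(prompt, "")


scores = evaluate_unlearning(
    model,
    forget_prompts=[("Who wrote Dune?", "Frank Herbert")],
    paraphrases=[("Dune was written by whom?", "Frank Herbert")],
    neighborhood=[("Who wrote Foundation?", "Isaac Asimov"),
                  ("Who wrote Neuromancer?", "William Gibson")],
)
print(scores)  # {'efficacy': 1.0, 'paraphrase_success': 0.0, 'specificity': 1.0}
```

Here the hypothetical model refuses the direct question but leaks the answer under paraphrase, which is the failure that paraphrase success is meant to catch.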

Unfortunately, there’s a dearth of datasets and benchmarks with which to compare unlearning methods. Luckily, some new benchmarks have come out in 2024, including TOFU and WMDP. TOFU focuses on unlearning information about individuals, specifically fictitious book authors, while WMDP centers on unlearning hazardous knowledge, such as knowledge related to biosecurity.

Discussion and Commentary

In the final section of Ken’s post, he covers a number of practical considerations surrounding machine unlearning, as well as how it interacts in practice with other AI research directions. Ken covers four subjects: the challenges of intuiting what is difficult to unlearn, the copyright landscape, RAG as an alternative, and AI safety.

While I agree with his assessment that it is difficult to intuit what is hard to unlearn, I disagree with some of his other points.

In his discussion of copyright, Ken correctly points out that whether model training counts as fair use is still an open question. He also points out that there are non-technical approaches to access revocation, such as “legally binding auditing procedures/mechanisms” that require exact unlearning or other guarantees from model creators.

One thing he mentions in this context is data markets, “where data owners are properly compensated so they won’t want to request unlearning in the first place.” While I agree that data owners can and should be compensated and their consent respected, “proper [compensation]” will not forestall all unlearning requests. In a market, if a prospective buyer is unwilling to pay the data owner’s price, the data owner would still request unlearning.

Going beyond this, I think that ideally, consent is granted before any training happens. This stands in direct contrast to Ken’s opinion, in which he says “In an ideal world, data should be thought of as “borrowed” (possibly unpermittedly) and thus can be “returned”” (emphasis mine).

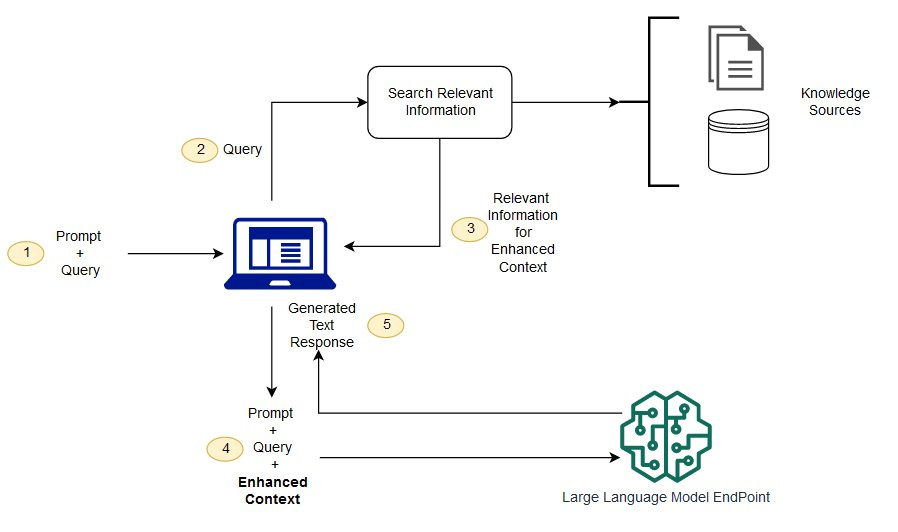

In the next subsection, Ken states, citing Min et al., that RAG could be used as an alternative to unlearning. If potentially problematic data is kept out of the LLM’s training set and instead isolated in an external data store that is only accessed in a RAG context, unlearning simply becomes the process of removing that data from the data store.
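
Here is a minimal sketch of why unlearning becomes deletion in this setup. The `DataStore` class and its word-overlap scoring are hypothetical stand-ins for a real retriever (which would typically use embeddings), and the LLM call is left out entirely: deleting a document changes what can be retrieved into the prompt, not anything the model learned during training.

```python
import re


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class DataStore:
    """Toy retrieval store that scores documents by word overlap with the query."""

    def __init__(self):
        self.docs = {}

    def add(self, doc_id, text):
        self.docs[doc_id] = text

    def delete(self, doc_id):
        # In this setup, "unlearning" a document is just removing it from the store.
        self.docs.pop(doc_id, None)

    def retrieve(self, query, k=1):
        q = tokenize(query)
        scored = sorted(self.docs.items(),
                        key=lambda item: len(q & tokenize(item[1])),
                        reverse=True)
        # Keep the top-k documents that share at least one word with the query.
        return [text for _, text in scored[:k] if q & tokenize(text)]


def build_prompt(store, question):
    context = "\n".join(store.retrieve(question, k=2))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


store = DataStore()
store.add("dune-1", "Arrakis is the desert planet in Dune.")
store.add("physics-1", "Water boils at 100 degrees Celsius at sea level.")

question = "What planet is Dune set on?"
print(store.retrieve(question))  # ['Arrakis is the desert planet in Dune.']
store.delete("dune-1")           # the unlearning request
print(store.retrieve(question))  # []
```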

While I agree with the limitations Ken already lists, I don’t think they’re comprehensive when considering such a system in practice. This is what a RAG system looks like:

In Min et al.’s solution, the RAG system creator also trains the LLM component in order to control its training data. This could be computationally infeasible for many companies and organizations. Failing that, these groups could select an off-the-shelf LLM trained only on low-risk data, but it is unclear whether any existing, accessible models fit this description.

If RAG system developers instead use an LLM that was likely trained on copyrighted or otherwise problematic data, the system remains unsafe even after the problematic data is removed from the data store, because RAG systems still hallucinate. As long as the problematic data was used to train the LLM, it could still surface through hallucination, even if none of the retrievable documents contain it.

Finally, while I agree with Ken that machine unlearning could help with AI safety, I still broadly believe that AI safety is not a model property. Concretely, consider Ken’s statement that machine unlearning could be used to “[remove] manipulative behaviors, such as the ability to perform unethical persuasions or deception.” One example of something manipulative is a phishing email. As Arvind and Sayash point out in their linked post, “phishing emails are just regular emails…What is malicious is the content of the [linked] webpage or the attachment [in the email].” However, the LLM does not have access to these links or attachments when it is asked to generate the body of an email. As a result, Arvind and Sayash argue, we cannot remove this manipulative behavior unless we “make [the LLM] refuse to generate emails” entirely. For this reason, I think machine unlearning may matter less for overall AI safety than Ken suggests.

Overall, I learned a lot through this post and urge anyone interested in diving further to read it in its entirety. Let me know what you think!