Right around the start of this year, thanks to LAION’s generous offer of hardware and compute, I started working on a formal model editing library for people working outside model editing and interpretability circles. The original idea for this library was presented in this earlier blog post. Since then, the idea has evolved through my own work as well as valuable feedback from Huu Nguyen of Ontocord. I am still continuing to work on the project, albeit fairly slowly due to my job and other responsibilities. Here’s a quick update on what has happened, what has changed, and what I’m working on now!

Easy Model Editing: Design and Components

The idea for this library begins with one principle: simplicity. Above anything else, I want this library to be extremely simple to use in its intended use case, much like inference with HuggingFace models as demonstrated in model cards, such as this one.

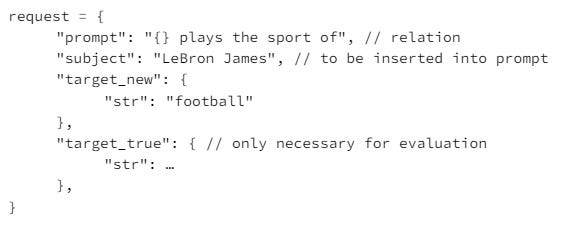

Just like in these model cards, I laid out my library’s desired final usage before writing a single line of code:

The intended users of my library are programmers and hobbyists who work with “standard” LLM pipelines. These users already have data processing, training, finetuning, and evaluation pipelines, but little custom scaffolding for research or highly bespoke applications. What they almost certainly don’t have is a reliable way to extract facts from their data, which they would need in order to use model editing instead of finetuning to update their models. They also don’t have a way to evaluate edit quality. So most of my work on the library will center on building model editing requests and on evaluating the edited model simply.

As a tradeoff for simplicity, the library will have certain limitations. It will likely be extremely narrow: if generalizing a feature would expose more complexity or demand extra work from the user, I will simply leave that feature out. A minimal library is also easier to maintain.

My full design document can be found here.

Current Progress

What I’ve done so far includes building the fundamental components of evaluation, filtering redundant requests, and extracting relations at the sentence level. I’ll cover the first two here and go into the last one in depth in the next section.

MEMIT and Specificity+ evaluate edit quality with 3 metrics:

Efficacy (% edits that took effect)

Paraphrase Success (% of edits that still take effect when paraphrasing the model’s input)

Neighborhood Success

In MEMIT, this is called “specificity,” and is defined as the probability the model “assigns to the correct answer…(instead of [the new answer specified in the edit request]), given prompts about distinct but semantically-related subjects”

More generally, we can use Neighborhood KL divergence, which measures the KL divergence between the model’s next-token distributions before and after editing
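As a rough sketch of how these metrics reduce to arithmetic, the Python below assumes you already have, for each prompt, the model’s probabilities for the edit’s new target and for the original (“true”) target. The `(p_new, p_true)` input format and all function names are hypothetical, not code from MEMIT or Specificity+. Following the convention in MEMIT’s evaluation, a prompt counts as a success when the intended target is more probable than the other one. The KL direction used here (pre-edit distribution first) is also an assumption.

```python
import math
from typing import Sequence, Tuple

# Hypothetical input format: one (p_new, p_true) pair per prompt, holding the
# model's probabilities for the edit's new target and for the original target.
ProbPairs = Sequence[Tuple[float, float]]


def success_rate(pairs: ProbPairs, expect_new: bool) -> float:
    """Share of prompts where the expected target is more likely than the other.

    expect_new=True  -> efficacy / paraphrase success (new target should win)
    expect_new=False -> neighborhood success (original target should still win)
    """
    if not pairs:
        return 0.0
    if expect_new:
        hits = sum(p_new > p_true for p_new, p_true in pairs)
    else:
        hits = sum(p_true > p_new for p_new, p_true in pairs)
    return hits / len(pairs)


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) between two next-token distributions over the same vocabulary.

    Assumes q is nonzero wherever p is, which holds for softmax outputs.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def neighborhood_kl(pre: Sequence[Sequence[float]], post: Sequence[Sequence[float]]) -> float:
    """Mean KL(pre-edit || post-edit) over the neighborhood prompts."""
    if not pre:
        return 0.0
    return sum(kl_divergence(p, q) for p, q in zip(pre, post)) / len(pre)


efficacy = success_rate([(0.62, 0.10), (0.05, 0.40)], expect_new=True)  # 0.5
neighborhood = success_rate([(0.02, 0.70), (0.30, 0.20)], expect_new=False)  # 0.5
drift = neighborhood_kl([[0.5, 0.5]], [[0.25, 0.75]])  # about 0.144
```

In practice the distributions would cover the whole vocabulary rather than two tokens; the toy numbers only show the shape of the computation.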

Currently, in the MEMIT repo, the paraphrases and neighborhood sentences used in these metrics are pre-specified within the datasets. However, most people working with ML don’t have a pipeline or procedure for creating these sentences from their own data. So I set out to build that pipeline myself.

To do this, I looked into how the researchers behind the CounterFact dataset originally built their paraphrase and neighborhood sentences, and used that to inform methods that automatically generate these sentences for arbitrary relations. To generate paraphrases, I first search ParaRel, a dataset of 328 paraphrases of 38 relations, and optionally use the Parrot library to generate more paraphrases with a model. For neighborhood sentences, I search the Wikidata knowledge graph. Given a request with this format:

the feature searches Wikidata for facts with the same `target_true` and `prompt`, but different subjects.
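A minimal sketch of both lookups, assuming ParaRel-style patterns with `[X]`/`[Y]` slots and MEMIT-style prompt templates with a `{}` subject slot. The in-memory `Fact` list is a hypothetical stand-in for a real Wikidata query, a shared relation ID stands in for “same prompt,” Parrot is not used, and every name and data point here is illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Fact:
    """A (subject, relation, object) triple, standing in for a Wikidata statement."""
    subject: str
    relation_id: str  # e.g. a Wikidata property ID such as "P103" (native language)
    obj: str


def pararel_to_template(pattern: str) -> Optional[str]:
    """'The native language of [X] is [Y].' -> 'The native language of {} is'.

    Patterns whose [Y] slot is not at the end cannot end in the target,
    so they are skipped (None).
    """
    stripped = pattern.strip().rstrip(".").rstrip()
    if "[X]" not in stripped or not stripped.endswith("[Y]"):
        return None
    return stripped[: -len("[Y]")].rstrip().replace("[X]", "{}")


def paraphrase_prompts(subject: str, patterns: Iterable[str]) -> List[str]:
    """Fill every usable pattern for the request's relation with its subject."""
    templates = (pararel_to_template(p) for p in patterns)
    return [t.format(subject) for t in templates if t is not None]


def neighborhood_subjects(facts: Iterable[Fact], subject: str, relation_id: str,
                          target_true: str, limit: int = 10) -> List[str]:
    """Distinct other subjects that share the request's relation and true target."""
    found: List[str] = []
    for fact in facts:
        if (fact.relation_id == relation_id and fact.obj == target_true
                and fact.subject != subject and fact.subject not in found):
            found.append(fact.subject)
            if len(found) == limit:
                break
    return found


# Toy data with made-up subjects.
patterns = [
    "[X]'s mother tongue is [Y].",
    "The native language of [X] is [Y].",
    "[Y] is the mother tongue of [X].",  # skipped: [Y] is not at the end
]
paraphrases = paraphrase_prompts("Ada Example", patterns)

facts = [
    Fact("Ada Example", "P103", "French"),
    Fact("Ben Example", "P103", "French"),
    Fact("Cy Example", "P103", "German"),
]
neighbors = neighborhood_subjects(facts, "Ada Example", "P103", "French")
neighborhood_prompts = ["The native language of {} is".format(s) for s in neighbors]
```

Restricting to patterns that end in `[Y]` matters because MEMIT-style prompts must end exactly where the target begins.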

With these methods, users can now apply the full suite of MEMIT evaluation metrics on any request data.

The other feature I’ve built an initial version of is request filtering. To save compute and minimize changes to the model weights, we only want to edit relations that aren’t already “present” in the model. Originally, I wanted to inspect model internals to figure out whether the model considered a relation “true,” but decided that output probability is a much simpler heuristic and good enough. After all, MEMIT works by maximizing the probability of the new target, and its metrics judge an edit by whether the new target outranks the “true” one. Based on that, the filtering process is very simple:

For each request

If the model greedily generates the target when provided the subject and the relation, remove that request from consideration
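The filter can be sketched as follows, assuming MEMIT-style request dicts (`prompt` with a `{}` subject slot, `subject`, and `target_new`) and a hypothetical `generate_greedy` callable. With Hugging Face `transformers`, that callable might wrap `model.generate(..., do_sample=False)` followed by `tokenizer.decode`; here a stub stands in so the example runs without a model. The word-prefix comparison is purely lexical, so it does not handle reworded targets.

```python
import string
from typing import Callable, Dict, List


def words(text: str) -> List[str]:
    """Lowercase, split on whitespace, and strip punctuation around each word."""
    cleaned = (w.strip(string.punctuation) for w in text.lower().split())
    return [w for w in cleaned if w]


def already_generates(completion: str, target: str) -> bool:
    """True if the greedy completion begins with the target's words."""
    target_words = words(target)
    return bool(target_words) and words(completion)[: len(target_words)] == target_words


def filter_requests(requests: List[Dict], generate_greedy: Callable[[str], str]) -> List[Dict]:
    """Keep only requests whose target the model does not already produce greedily."""
    kept = []
    for req in requests:
        prompt = req["prompt"].format(req["subject"])
        if not already_generates(generate_greedy(prompt), req["target_new"]["str"]):
            kept.append(req)
    return kept


# Stub standing in for a real greedy decoder (hypothetical behavior).
def fake_generate(prompt: str) -> str:
    return " Paris, a city in Europe." if prompt.startswith("The capital of France") else " London."


requests = [
    {"prompt": "The capital of {} is", "subject": "France", "target_new": {"str": "Paris"}},
    {"prompt": "{} works in the city of", "subject": "Ada Example", "target_new": {"str": "Berlin"}},
]
remaining = filter_requests(requests, fake_generate)  # only the "Ada Example" request remains
```

Comparing whole words rather than raw string prefixes keeps a target like “Paris” from matching a completion such as “Parisian”.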

I am still working on making the removal robust to semantically equivalent rewordings of the target. I’m also considering an efficient request deduplication step that removes semantically equivalent requests as well, but it probably won’t make it into the first version of the library.
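As a cheap first pass before any semantic deduplication, exact duplicates can be dropped in a single pass by hashing a normalized key. This sketch is only lexical (it catches case, spacing, and punctuation differences, not paraphrases), and the request format and function names are assumptions.

```python
import string
from typing import Dict, List, Tuple


def norm(text: str) -> str:
    """Lowercase, strip punctuation around words, and collapse whitespace.

    The '{}' subject slot becomes a marker word first, so templates like
    '{} beat' and 'beat {}' stay distinct.
    """
    text = text.replace("{}", " subjslot ")
    cleaned = (w.strip(string.punctuation) for w in text.lower().split())
    return " ".join(w for w in cleaned if w)


def request_key(req: Dict) -> Tuple[str, str, str]:
    return norm(req["subject"]), norm(req["prompt"]), norm(req["target_new"]["str"])


def dedupe(requests: List[Dict]) -> List[Dict]:
    """Keep the first of each group of lexically identical requests (one pass with a hash set)."""
    seen = set()
    unique = []
    for req in requests:
        key = request_key(req)
        if key not in seen:
            seen.add(key)
            unique.append(req)
    return unique


requests = [
    {"prompt": "{} works in the city of", "subject": "Ada Example", "target_new": {"str": "Berlin"}},
    {"prompt": "{} works in the city of ", "subject": "ada  example", "target_new": {"str": "Berlin."}},
    {"prompt": "{} lives in", "subject": "Ada Example", "target_new": {"str": "Berlin"}},
]
unique = dedupe(requests)  # the second request is dropped as a duplicate of the first
```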

Extracting Relations from Text

The meat of this project is relation extraction from unstructured, untokenized text datasets. Most people training or tuning models don’t have a pipeline for extracting facts from documents, but these relations are key to using MEMIT.

After discussing this with Huu, I decided not to use document-level relation extraction methods, which would almost certainly require me to convert yet another repo into a usable library and host an ML model in the cloud. Instead, I’ll be using a sentence-level method: spaCy. spaCy pipelines come with dependency parsing, which can robustly determine the relationships between words and phrases within a single sentence. That lets us extract subjects, relations, and objects, which is what we need to build the request objects MEMIT uses.

Once this was decided, the next step was to make sure the extracted parts were any good. To do this, I tried to replicate the CounterFact dataset using my spaCy-based subject and target extraction methods. The full Colab notebook, with far more detail on my methodology, is here.
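One way to score an extractor against CounterFact is to rebuild each sentence from a record’s prompt, subject, and true target, then compare what the extractor returns with the known subject and target. This sketch assumes CounterFact’s `requested_rewrite` fields and a hypothetical `extract(sentence)` callable returning `(subject, target)`; it uses normalized exact match, which may be stricter or looser than the criterion in the notebook.

```python
import string
from typing import Callable, Dict, Iterable, Optional, Tuple

# Hypothetical extractor signature: sentence -> (subject, target); either may be None.
Extractor = Callable[[str], Tuple[Optional[str], Optional[str]]]


def norm(text: Optional[str]) -> str:
    """Lowercase, strip punctuation around words, and collapse whitespace."""
    if not text:
        return ""
    cleaned = (w.strip(string.punctuation) for w in text.lower().split())
    return " ".join(w for w in cleaned if w)


def extraction_accuracy(records: Iterable[Dict], extract: Extractor) -> Tuple[float, float]:
    """(subject accuracy, target accuracy) over CounterFact-style records."""
    subject_hits = target_hits = total = 0
    for record in records:
        rw = record["requested_rewrite"]
        gold_subject, gold_target = rw["subject"], rw["target_true"]["str"]
        # Rebuild the full sentence, e.g. "Max Weber works in the field of history".
        sentence = f"{rw['prompt'].format(gold_subject)} {gold_target}"
        subject, target = extract(sentence)
        subject_hits += norm(subject) == norm(gold_subject)
        target_hits += norm(target) == norm(gold_target)
        total += 1
    if total == 0:
        return 0.0, 0.0
    return subject_hits / total, target_hits / total
```

Reporting subject and target accuracy separately makes it easy to see which half of the extraction a change actually helped.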

I quickly realized that the naïve method of getting the “subject” and “object” noun chunks was not very good. A lot of “sentences” in CounterFact aren’t actually grammatical sentences, so often there was no subject or no object. For instance, all of these were relations within the CounterFact dataset:

Autobianchi, from Fiat

Alfred Savoir, a native French

Banaskantha district, in Asia

Other relations had a target or subject that didn’t even appear among spaCy’s noun chunks. Because of these limitations, I decided to work at the word level instead of the chunk level, and expanded my search beyond just the “subj” and “obj” dependency types. These changes and a few others raised both subject and target accuracy to just under 90% (strategy 4 in the notebook). After removing relations spanning more than one sentence and reworking target detection, accuracy rose to just over 90% (strategy 5).
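A word-level search over dependency labels might look like the sketch below. The `Token` class mirrors spaCy’s `token.text`, `token.pos_`, and `token.dep_`, but the parses are hand-written for illustration rather than real spaCy output, and the label sets are assumptions, not the notebook’s exact strategy 4. Note that word-level matching returns only the head word (“Weber”, not “Max Weber”).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Token:
    text: str
    pos: str  # coarse part-of-speech tag, like spaCy's token.pos_
    dep: str  # dependency label, like spaCy's token.dep_


SUBJECT_DEPS = {"nsubj", "nsubjpass", "csubj"}
OBJECT_DEPS = {"dobj", "pobj", "attr", "oprd", "dative"}
NOUN_POS = {"NOUN", "PROPN"}


def word_level_extract(tokens: List[Token]) -> Tuple[Optional[str], Optional[str]]:
    """Pick a subject word and a target word using dependency labels, not noun chunks."""
    subject = next((t for t in tokens if t.dep in SUBJECT_DEPS), None)
    if subject is None:
        # Fragments such as "Autobianchi, from Fiat" have no nominal subject,
        # so fall back to the first noun or proper noun.
        subject = next((t for t in tokens if t.pos in NOUN_POS), None)
    objects = [t for t in tokens if t.dep in OBJECT_DEPS and t is not subject]
    # CounterFact targets sit at the end of the sentence, so prefer the last object.
    target = objects[-1] if objects else None
    return (subject.text if subject else None, target.text if target else None)


# Hand-written parse for illustration (not actual spaCy output).
fragment = [
    Token("Autobianchi", "PROPN", "ROOT"),
    Token(",", "PUNCT", "punct"),
    Token("from", "ADP", "prep"),
    Token("Fiat", "PROPN", "pobj"),
]
print(word_level_extract(fragment))  # ('Autobianchi', 'Fiat')
```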

The most recent improvements I’ve made were treating noun phrases and proper noun phrases differently, and adding a hack to proper noun identification. From observing many samples, I noticed that a sentence like “Max Weber works in the field of history” usually had “history” as its target, while a sentence like “Max Weber works on the Annals of History” would have “Annals of History” as its target. In other words, proper-noun targets appeared as full phrases, while common-noun targets appeared as single words without the rest of their chunk. As a result, I chose to handle regular nouns and proper nouns differently.

I also noticed that many things I knew were proper nouns weren’t even identified as nouns by the pipeline. For example, the pipeline tags “Fischertechnik” in “Fischertechnik was created in Germany” as an adverb. To handle this, as a last resort, if no nouns or clauses acting as nouns are found, I identify proper nouns by first-letter capitalization. These changes brought me to strategy 6, which achieved 92.9% accuracy identifying subjects and 97.6% accuracy identifying targets across all of CounterFact.
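The noun/proper-noun split and the capitalization fallback can be sketched on top of a word-level search. Assumptions: hand-written parses (not spaCy output), a hypothetical `CONNECTORS` set of lowercase words allowed inside proper-noun phrases, and a fixed search order (dependency label, then noun tag, then capitalization). This illustrates the idea rather than reproducing strategy 6.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Token:
    text: str
    pos: str  # coarse part-of-speech tag, like spaCy's token.pos_
    dep: str  # dependency label, like spaCy's token.dep_


SUBJECT_DEPS = {"nsubj", "nsubjpass", "csubj"}
OBJECT_DEPS = {"dobj", "pobj", "attr", "oprd", "dative"}
NOUN_POS = {"NOUN", "PROPN"}
CONNECTORS = {"of", "and", "the"}  # hypothetical words allowed inside proper-noun phrases


def is_noun(t: Token) -> bool:
    return t.pos in NOUN_POS


def is_capitalized(t: Token) -> bool:
    # Last resort for proper nouns the tagger missed; weak on its own,
    # since sentence-initial words are always capitalized.
    return t.text[:1].isupper()


def find_subject(tokens: List[Token]) -> Optional[int]:
    root = next((i for i, t in enumerate(tokens) if t.dep == "ROOT"), 0)
    # Look left of the main verb; if the root is the first word (a fragment), search everything.
    region = range(root) if root > 0 else range(len(tokens))
    tests: List[Callable[[Token], bool]] = [lambda t: t.dep in SUBJECT_DEPS, is_noun, is_capitalized]
    for test in tests:
        for i in region:
            if test(tokens[i]):
                return i
    return None


def find_target(tokens: List[Token], subject: Optional[int]) -> Optional[int]:
    tests: List[Callable[[Token], bool]] = [lambda t: t.dep in OBJECT_DEPS, is_noun, is_capitalized]
    for test in tests:
        hits = [i for i, t in enumerate(tokens) if i != subject and test(t)]
        if hits:
            return hits[-1]  # CounterFact targets sit at the end of the sentence
    return None


def phrase(tokens: List[Token], i: int) -> str:
    """Proper nouns grow into their full phrase; common nouns stay single words."""
    if tokens[i].pos != "PROPN":
        return tokens[i].text

    def joinable(t: Token) -> bool:
        return t.pos == "PROPN" or t.text.lower() in CONNECTORS

    start = end = i
    while start > 0 and joinable(tokens[start - 1]):
        start -= 1
    while end + 1 < len(tokens) and joinable(tokens[end + 1]):
        end += 1
    # Connectors may link proper nouns but should not start or end the phrase.
    while tokens[start].pos != "PROPN":
        start += 1
    while tokens[end].pos != "PROPN":
        end -= 1
    return " ".join(t.text for t in tokens[start : end + 1])


def extract(tokens: List[Token]) -> Tuple[Optional[str], Optional[str]]:
    s = find_subject(tokens)
    t = find_target(tokens, s)
    return (phrase(tokens, s) if s is not None else None,
            phrase(tokens, t) if t is not None else None)


# Hand-written parses for illustration (not actual spaCy output).
annals = [Token(*x) for x in [
    ("Max", "PROPN", "compound"), ("Weber", "PROPN", "nsubj"), ("works", "VERB", "ROOT"),
    ("on", "ADP", "prep"), ("the", "DET", "det"), ("Annals", "PROPN", "pobj"),
    ("of", "ADP", "prep"), ("History", "PROPN", "pobj"),
]]
fischer = [Token(*x) for x in [
    ("Fischertechnik", "ADV", "advmod"), ("was", "AUX", "auxpass"),
    ("created", "VERB", "ROOT"), ("in", "ADP", "prep"), ("Germany", "PROPN", "pobj"),
]]
print(extract(annals))   # ('Max Weber', 'Annals of History')
print(extract(fischer))  # ('Fischertechnik', 'Germany')
```

Putting capitalization last keeps it from overriding a real dependency or POS signal; it only decides when nothing else matched.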

Next Steps

Currently, my goal is to get a fairly robust, end-to-end system working according to the API in the “Design and Components” section. This still requires the following:

Wrapping the MEMIT editing function to match the desired API

Refactoring the Specificity+ repo’s evaluation methods to match the desired API

And while the next tasks are not technically “required,” they are likely necessary to bring the library to a usable quality. Some version of each will more likely than not be incorporated into the library.

Extractive summarization, e.g. via sumy, to get key sentences from documents

Efficiency improvements

Batching gradient descent steps in MEMIT, as suggested in Appendix B of the paper

Ensuring that I use spaCy tools designed for large-scale processing, such as `nlp.pipe`

More to come
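To illustrate what extractive summarization does, here is a toy frequency-based scorer that keeps the sentences whose content words are most common in the document, in their original order. It is not sumy’s API or any of its algorithms; the stopword list and sentence splitter are deliberately naive assumptions.

```python
import re
from collections import Counter
from typing import List

# A tiny illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "the", "and", "or", "of", "in", "on", "to", "is", "was",
             "it", "for", "with", "as", "by"}


def split_sentences(text: str) -> List[str]:
    """Naive split on '.', '!', or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence: str) -> List[str]:
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def summarize(text: str, k: int = 2) -> List[str]:
    """Return the k sentences with the highest mean word frequency, in document order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        ws = content_words(sentence)
        return sum(freq[w] for w in ws) / len(ws) if ws else 0.0

    # sorted() is stable even with reverse=True, so ties keep document order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


doc = "Acme builds rockets. Acme rockets fly far. It rained today. Acme sells rockets worldwide."
key_sentences = summarize(doc, k=2)
```

Averaging rather than summing word frequencies keeps long sentences from winning just by being long.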

As for work tangential to the library itself, I fully intend to set up CI for my fork. I also still need to clean up the repo: most of the existing code is experiment code from Specificity+, a body of research, built on MEMIT, on detecting editing failures in models. All of the experiment code will be removed from the final result.

Once I have a first version out, I’ll share it with friends and colleagues and keep building on the feedback I get. Hopefully someone finds this neat and useful, and we can inch a little closer to a future of transparent and explainable AI.

Reading List

I recently finished reading TransformerFAM, a paper from Google that introduces a feedback attention mechanism that lets information propagate across indefinitely long inputs. It’s been a while since I really looked at cutting-edge NLP, so I’m glad I decided to read this.

Otherwise, here’s some other stuff I’m interested in reading:

“The Geometry of Truth: Emergent Linear Structure in Large Language Model Representations of True/False Datasets” - I’m most interested in the authors’ direct model editing to change whether a model “thinks” a statement is true

As part of my job, I’m starting to learn about normalizing and equivariant flows for modeling many-body physical systems. No specific papers to list here, however.